
- February 27, 2023

Make-A-Video: Transforming Text Prompts into Dynamic Video Clips

Meta AI has unveiled Make-A-Video, an AI system that generates short video clips from text prompts. Building on recent progress in generative AI research, it aims to give creators and artists a fast, straightforward tool for producing video content. Make-A-Video learns what the world looks like from paired text-image data and how the world moves from video footage, which lets it create unique, high-quality clips.

Technical Details

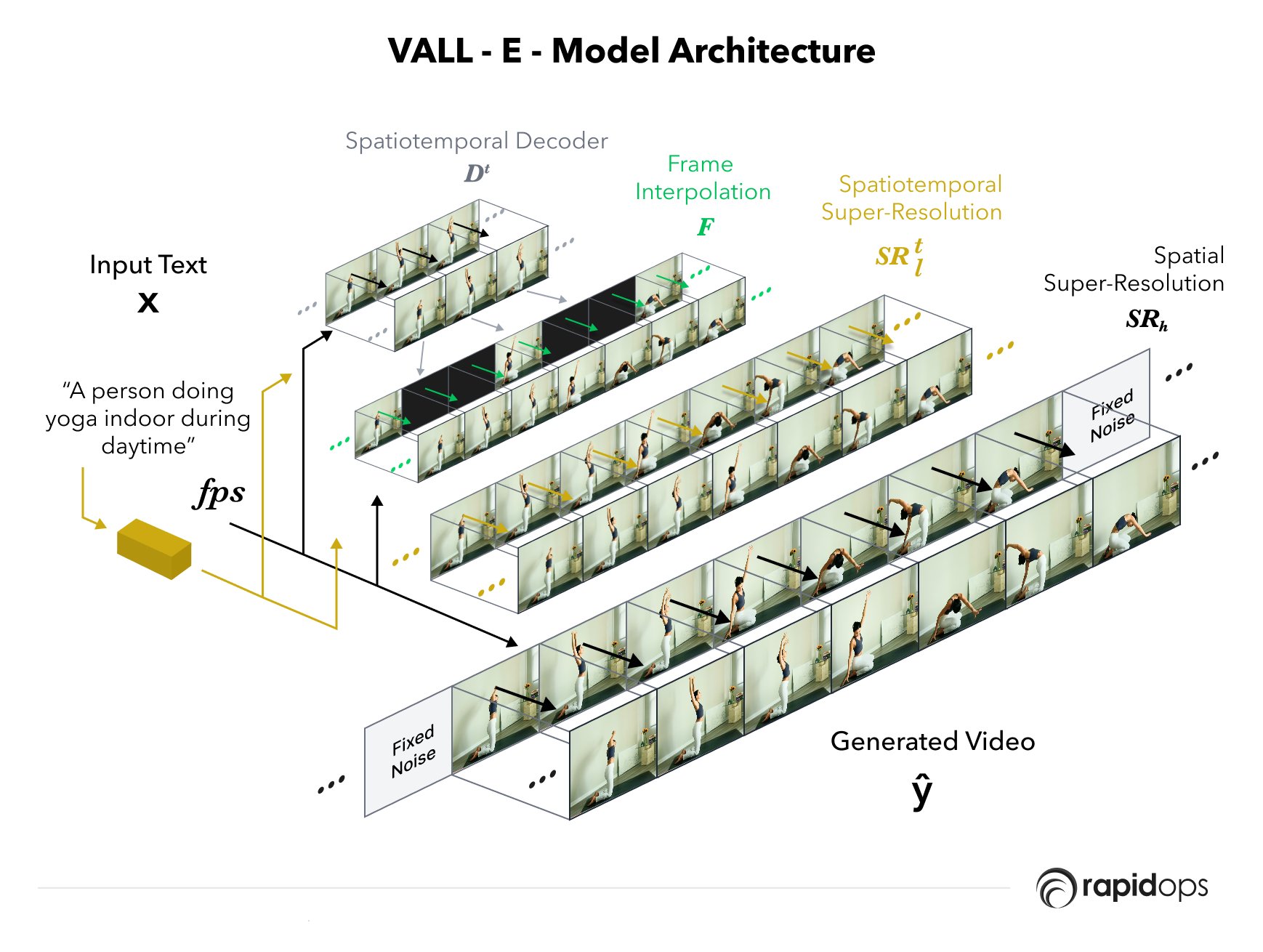

Meta's Make-A-Video builds on a pretrained text-to-image diffusion model. It learns what the world looks like from paired text-image data and how the world moves from video footage that has no accompanying text, so this motion-learning stage is unsupervised and does not require captioned video. Meta's paper reports training on a subset of roughly 2.3 billion text-image pairs drawn from LAION-5B, together with unlabeled clips from the WebVid-10M and HD-VILA-100M datasets. To turn the image model into a video model, the system adds spatiotemporal layers that extend its convolution and attention across time, generates a short, low-resolution clip, and then raises the clip's frame rate and resolution with separate frame-interpolation and super-resolution networks. The published results are short clips at up to 768×768 pixels.
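Meta's Make-A-Video paper describes extending a pretrained image model with "pseudo-3D" convolutions: each 2D spatial convolution is followed by a 1D temporal convolution, and the temporal layer is initialized to the identity so that, at the start of video training, the network behaves exactly like the image model applied frame by frame. The pure-Python sketch below illustrates only that factorization on a toy single-channel clip. It is not Meta's implementation; the function names, kernels, and the constant `IDENTITY_TEMPORAL` are hypothetical, and real models use many channels, learned weights, and a deep-learning framework.

```python
from typing import List

Frame = List[List[float]]  # one single-channel frame, indexed [y][x]
Video = List[Frame]        # a clip, indexed [t][y][x]


def conv2d_same(frame: Frame, kernel: List[List[float]]) -> Frame:
    """2D cross-correlation (the 'convolution' of deep-learning libraries) with zero padding."""
    h, w, k = len(frame), len(frame[0]), len(kernel)
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(k):
                for dx in range(k):
                    yy, xx = y + dy - r, x + dx - r
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[dy][dx] * frame[yy][xx]
            out[y][x] = acc
    return out


def conv1d_time(video: Video, kernel: List[float]) -> Video:
    """1D cross-correlation along the time axis at every pixel, zero-padded in time."""
    t_len, h, w = len(video), len(video[0]), len(video[0][0])
    r = len(kernel) // 2
    out = [[[0.0] * w for _ in range(h)] for _ in range(t_len)]
    for t in range(t_len):
        for y in range(h):
            for x in range(w):
                acc = 0.0
                for i, weight in enumerate(kernel):
                    tt = t + i - r
                    if 0 <= tt < t_len:
                        acc += weight * video[tt][y][x]
                out[t][y][x] = acc
    return out


def pseudo_3d_conv(video: Video, spatial_kernel: List[List[float]],
                   temporal_kernel: List[float]) -> Video:
    """Hypothetical toy pseudo-3D layer: spatial 2D conv per frame, then temporal 1D conv."""
    # Step 1: apply the (pretrained) image kernel to each frame independently.
    per_frame = [conv2d_same(frame, spatial_kernel) for frame in video]
    # Step 2: mix each pixel with the same pixel in neighbouring frames.
    return conv1d_time(per_frame, temporal_kernel)


# Identity initialization: the temporal layer passes each frame through unchanged,
# so the layer starts out equivalent to the image model applied frame by frame.
IDENTITY_TEMPORAL = [0.0, 1.0, 0.0]

if __name__ == "__main__":
    clip = [[[float(t + y + x) for x in range(3)] for y in range(3)] for t in range(4)]
    blur = [[1 / 9] * 3 for _ in range(3)]
    frame_by_frame = [conv2d_same(f, blur) for f in clip]
    print(pseudo_3d_conv(clip, blur, IDENTITY_TEMPORAL) == frame_by_frame)  # True
```

In this sketch, replacing `IDENTITY_TEMPORAL` with a learned kernel is what would let the layer start mixing information across frames, while the spatial kernel can keep the image model's weights. That reflects the general idea of reusing image knowledge and learning motion separately.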

Capabilities

Meta's Make-A-Video offers the following capabilities:

- Content Creation: With Make-A-Video, users can generate footage for diverse forms of content, such as short films, music videos, and commercials. The model's ability to produce high-quality video from text prompts opens new avenues for creative expression.

- Personalized Experiences: Make-A-Video can generate video tailored to individual users. By writing prompts that reflect their preferences or interests, users receive customized clips that match their own vision.

- Exploring New Ideas: The model can serve as a tool for visualizing new ideas. For example, writers could describe characters they are developing and have Make-A-Video generate clips of those characters in action, aiding the creative process.

Limitations

While Make-A-Video represents a significant advancement in generative AI technology, it is important to acknowledge certain limitations:

- Contextual Understanding: Make-A-Video relies on text prompts to generate video clips, so its grasp of context is limited to what the text provides. It may struggle with complex or nuanced prompts, producing inconsistencies or misinterpretations in the generated videos.

- Fine-Grained Control: Although users steer Make-A-Video through text prompts, their control over specific visual elements or scene composition may be limited. Users may find it hard to precisely adjust the details and aesthetics of the generated videos, which can keep them from achieving their intended creative vision.

- Training Data Bias: Like other generative AI models, Make-A-Video is shaped by its training data. If that data contains biases or lacks diversity, representation, or cultural context, the generated videos may inadvertently reflect those gaps. Users should be mindful of potential bias and take steps to address it during the creative process.

- Complex Real-World Scenes: Make-A-Video's understanding of the world comes from paired text-image data and video footage. It may struggle with highly complex or dynamic real-world scenes that are poorly represented in that training data, producing videos that are less realistic or coherent.

- Ethical Considerations: As with any generative AI system, the ethical use of Make-A-Video is crucial. The technology should be used responsibly, avoiding content that infringes intellectual property or privacy rights or that propagates harmful narratives.

Use Cases

Make-A-Video has potential use cases across several industries and domains, including:

- Entertainment and Media: Make-A-Video could change how the entertainment industry produces video by automating parts of the process. It could generate short clips for trailers, teasers, and promotional videos, saving time and resources for filmmakers and production houses.

- Advertising and Marketing: Marketers could use Make-A-Video to create engaging video ads for products and services, turning text-based campaign ideas into visually appealing clips that capture the attention of target audiences.

- Gaming: Make-A-Video could support game development by generating footage for cutscenes, character animations, and environment concepts, helping developers prototype immersive worlds and narratives quickly.

- Education and Training: In education, Make-A-Video could help produce learning materials, animated presentations, and instructional videos, offering a new way to engage learners and explain complex concepts.

Frequently Asked Questions

What is Make-A-Video used for?

Is Make-A-Video open-source?

Is Make-A-Video free to use?